© 2025 Fractional AI

Beghou partnered with Fractional AI to build an intelligent copilot that automates complex configuration workflows in Beghou Arc — the company’s flagship life sciences data platform. The AI system dramatically reduces engineering time for new client implementations, completing configuration tasks up to 10× faster, while streamlining onboarding and creating a foundation for scalable platform delivery.

"Fractional AI was instrumental in helping us streamline engineering workflows and deliver tailored, tech-enabled solutions to clients faster – while maintaining rigor and excellence Beghou is known for. Now our team can focus more of its time on innovation and solving complex client challenges."

- Dan Cardinal, CTO, Beghou

Beghou is a life sciences consulting and technology firm helping biopharma organizations build strong data and technology foundations, drive operational excellence, and engage providers, payers, and patients.

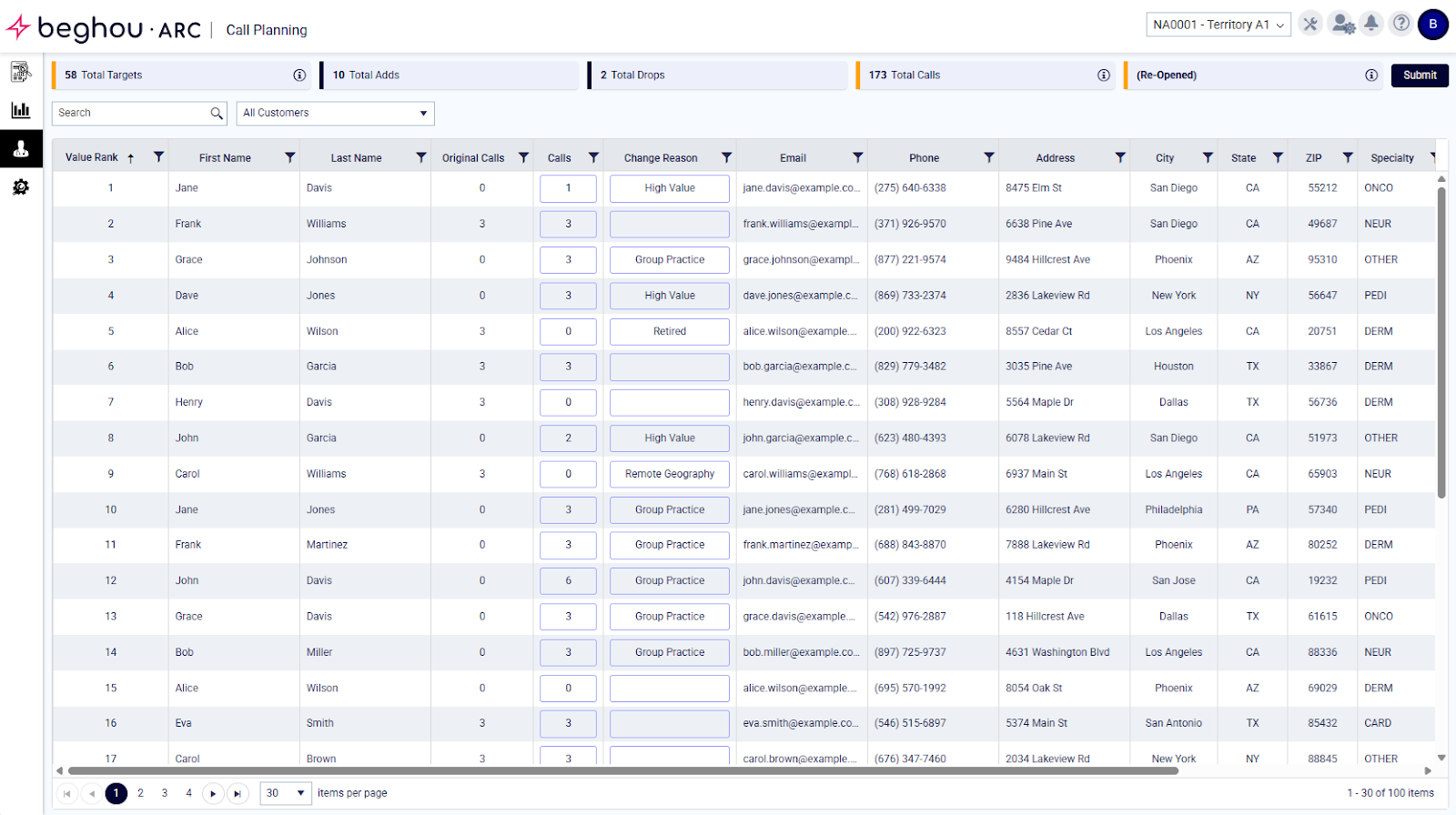

One of Beghou’s core offerings is Beghou Arc, a connected, flexible commercialization platform that helps life sciences clients unify and activate their data, tech, and execution across commercial planning, field deployment and operations, incentive compensation, customer engagement, and decision intelligence. Each Arc instance is uniquely configured for the client’s business logic — an approach that delivers precision and flexibility but has historically demanded deep engineering expertise and extensive manual effort.

Each Beghou Arc site is programmatically generated from more than 100 interrelated metadata tables spanning over 1,500 columns. While this design allows precise customization, it also introduces steep complexity for engineers configuring new sites or updating existing ones.

Even with Beghou’s natural-language configuration UI, site setup and modification required substantial manual work. Adding something as simple as a search bar to a “Manager Review” page could require joining multiple metadata tables, tracking foreign keys across UIGrids, UIFilterCollections, and UIDataFields, and checking for deprecated fields.
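To give a feel for that kind of lookup, here is a minimal sketch using an in-memory SQLite database. Only the table names UIGrids, UIFilterCollections, and UIDataFields come from the description above. Every column name, the sample rows, and the `active_fields` helper are hypothetical and are not Beghou Arc's actual schema.

```python
import sqlite3

# Hypothetical, heavily simplified stand-in for three metadata tables.
# Column names and rows are illustrative assumptions only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE UIGrids (
        GridID INTEGER PRIMARY KEY,
        PageName TEXT NOT NULL
    );
    CREATE TABLE UIFilterCollections (
        FilterCollectionID INTEGER PRIMARY KEY,
        GridID INTEGER NOT NULL REFERENCES UIGrids(GridID)
    );
    CREATE TABLE UIDataFields (
        DataFieldID INTEGER PRIMARY KEY,
        FilterCollectionID INTEGER NOT NULL
            REFERENCES UIFilterCollections(FilterCollectionID),
        FieldName TEXT NOT NULL,
        IsDeprecated INTEGER NOT NULL DEFAULT 0
    );
    INSERT INTO UIGrids VALUES (1, 'Manager Review');
    INSERT INTO UIFilterCollections VALUES (10, 1);
    INSERT INTO UIDataFields VALUES
        (100, 10, 'RepName', 0),
        (101, 10, 'LegacyTerritory', 1);
""")


def active_fields(conn: sqlite3.Connection, page_name: str) -> list[str]:
    """Follow the foreign keys from a page's grid to its non-deprecated fields."""
    rows = conn.execute(
        """
        SELECT f.FieldName
        FROM UIGrids AS g
        JOIN UIFilterCollections AS c ON c.GridID = g.GridID
        JOIN UIDataFields AS f ON f.FilterCollectionID = c.FilterCollectionID
        WHERE g.PageName = ? AND f.IsDeprecated = 0
        ORDER BY f.FieldName
        """,
        (page_name,),
    ).fetchall()
    return [row[0] for row in rows]


print(active_fields(conn, "Manager Review"))
```

Even in this toy version, a single question about one page touches three tables and a deprecation flag, which hints at how the effort grows across a schema of more than 100 tables.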

To maintain Beghou’s delivery standards while scaling its client base, the team sought a way to:

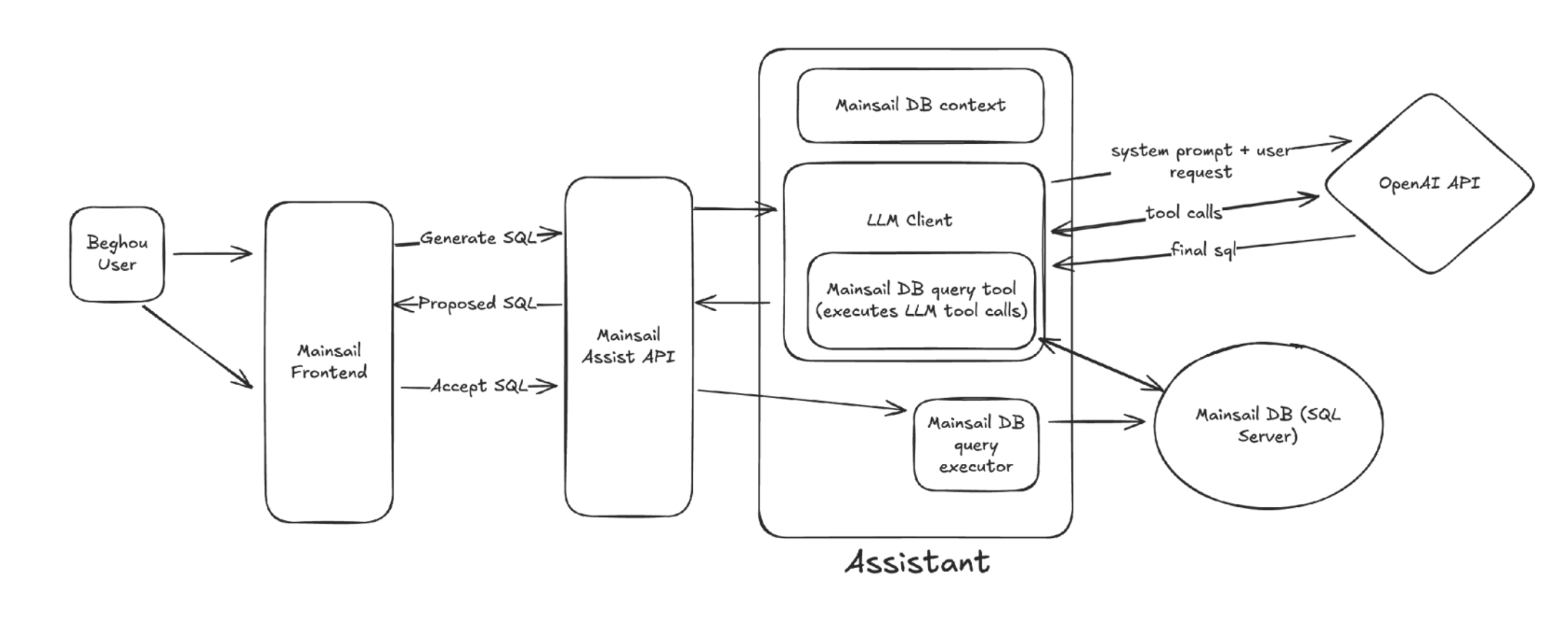

Fractional and Beghou jointly developed an AI system that could translate natural-language configuration requests into validated SQL proposals — all without executing any changes automatically.

The copilot operates as a backend service integrated with Beghou Arc’s UI. Engineers or configurators can issue commands such as:

Each request triggers a reasoning workflow that interprets the user’s intent, inspects the current site configuration through read-only database queries, and generates a proposed SQL script. The engineer remains fully in control, reviewing every proposed change before execution.

This approach preserves expert oversight while automating the most repetitive and error-prone aspects of Arc configuration.
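The inspect-then-propose pattern described above can be sketched as follows. This is an illustration under stated assumptions, not the production workflow: SQLite stands in for the real database, the column names and the `Proposal`, `build_demo_db`, and `propose_search_bar` names are hypothetical, and a fixed template replaces the model-generated SQL.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Proposal:
    """A SQL script offered for review; the agent never runs it."""
    request: str
    sql: str
    approved: bool = False  # only a human reviewer would flip this


def build_demo_db() -> sqlite3.Connection:
    """Create a tiny hypothetical schema, then lock the connection read-only."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE UIGrids (GridID INTEGER PRIMARY KEY, PageName TEXT);
        CREATE TABLE UIFilterCollections (
            FilterCollectionID INTEGER PRIMARY KEY,
            GridID INTEGER,
            FilterType TEXT
        );
        INSERT INTO UIGrids VALUES (1, 'Manager Review');
    """)
    # SQLite pragma that rejects any further writes on this connection.
    conn.execute("PRAGMA query_only = ON")
    return conn


def propose_search_bar(conn: sqlite3.Connection, page_name: str) -> Proposal:
    """Inspect the current configuration, then return SQL as text only."""
    row = conn.execute(
        "SELECT GridID FROM UIGrids WHERE PageName = ?", (page_name,)
    ).fetchone()
    if row is None:
        raise LookupError(f"no grid found for page {page_name!r}")
    grid_id = int(row[0])
    sql = (
        "INSERT INTO UIFilterCollections (GridID, FilterType) "
        f"VALUES ({grid_id}, 'search');"
    )
    return Proposal(request=f"Add a search bar to {page_name}", sql=sql)


conn = build_demo_db()
proposal = propose_search_bar(conn, "Manager Review")
print(proposal.sql)
```

Because the connection is read-only, even a buggy agent step that tried to run its own proposal would fail rather than alter the site, which is one simple way to enforce the "propose, never execute" boundary at the database level.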

The Beghou Arc AI Copilot now enables engineers to complete configuration tasks in minutes instead of hours or days, delivering measurable improvements across the board:

At its core, the system is powered by a FastAPI backend orchestrating a GPT-5-mini-based agent optimized for low-latency tool use. The agent operates within strict boundaries:

Every session is stored with full conversational history to maintain context and auditability.
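One simple way to keep per-session history for context and audit is an append-only message log. The sketch below is an assumption for illustration: the case study does not say how sessions are persisted, and the `SessionStore` class, its table layout, and the use of SQLite are all hypothetical.

```python
import sqlite3
import time


class SessionStore:
    """Append-only log of conversation turns, keyed by session ID."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " session_id TEXT NOT NULL,"
            " role TEXT NOT NULL,"
            " content TEXT NOT NULL,"
            " created_at REAL NOT NULL)"
        )

    def append(self, session_id: str, role: str, content: str) -> None:
        """Record one turn; rows are never updated or deleted."""
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO messages (session_id, role, content, created_at)"
                " VALUES (?, ?, ?, ?)",
                (session_id, role, content, time.time()),
            )

    def history(self, session_id: str) -> list[dict]:
        """Return one session's turns in the order they were recorded."""
        rows = self.conn.execute(
            "SELECT role, content FROM messages"
            " WHERE session_id = ? ORDER BY id",
            (session_id,),
        ).fetchall()
        return [{"role": role, "content": content} for role, content in rows]


store = SessionStore()
store.append("s1", "user", "Add a search bar to Manager Review")
store.append("s1", "assistant", "Proposed SQL: ...")
print(store.history("s1"))
```

The same ordered list can be replayed to the agent as context on the next turn and read by a reviewer later as an audit trail.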

Evaluating SQL-generating agents presents unique challenges: correctness is often context-specific, and small syntax differences can mask logical errors. To achieve deterministic testing, Fractional designed a custom containerized evaluation environment:

The evals serve as both QA and continuous improvement infrastructure, providing immediate feedback when prompt, reasoning, or model changes affect reliability.
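One common way to make such checks deterministic is to compare the database state a script produces rather than its text. The sketch below illustrates that idea under stated assumptions: the case study does not describe the harness internals, a fresh in-memory SQLite database stands in for the containerized environment, and the schema and the `snapshot_after` and `same_effect` helpers are hypothetical.

```python
import sqlite3

# Hypothetical seed schema loaded into a fresh database for every run.
SCHEMA = """
CREATE TABLE UIFilterCollections (
    FilterCollectionID INTEGER PRIMARY KEY,
    GridID INTEGER NOT NULL,
    FilterType TEXT NOT NULL
);
INSERT INTO UIFilterCollections (GridID, FilterType) VALUES (1, 'dropdown');
"""


def snapshot_after(script: str) -> dict:
    """Run a script against a fresh database and capture every table's rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executescript(script)
        tables = [
            row[0]
            for row in conn.execute(
                "SELECT name FROM sqlite_master"
                " WHERE type = 'table' ORDER BY name"
            )
        ]
        return {
            table: sorted(conn.execute(f'SELECT * FROM "{table}"').fetchall())
            for table in tables
        }
    finally:
        conn.close()


def same_effect(proposed: str, reference: str) -> bool:
    """Two scripts pass if they leave identical data behind."""
    return snapshot_after(proposed) == snapshot_after(reference)


reference = "INSERT INTO UIFilterCollections (GridID, FilterType) VALUES (2, 'search');"
reworded = "insert into UIFilterCollections (FilterType, GridID) values ('search', 2);"
wrong_grid = "INSERT INTO UIFilterCollections (GridID, FilterType) VALUES (3, 'search');"

print(same_effect(reworded, reference))
print(same_effect(wrong_grid, reference))
```

A reworded script with the same effect passes, while a script that looks nearly identical but targets the wrong grid fails, so the check scores the logical outcome and not the surface syntax.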

Through extensive experimentation, the team surfaced several key observations:

Together, these patterns formed a repeatable methodology for building safe, efficient, and explainable AI systems.