© 2025 Fractional AI


Fractional AI built a research agent system that autonomously surfaces and validates emerging market trends. Using structured debate between reasoning agents, it delivers data-backed, decision-ready reports in under 35 minutes for about $3 – laying the groundwork to multiply Analyst throughput and speed up proactive trend discovery for a PE-backed intelligence platform.

Generating high-quality insights from this market intelligence platform's data has traditionally required Senior Analysts with significant experience. Even then, Analysts were limited to primarily responding to client-initiated questions. Meanwhile, important signals – internet trends, consumer sentiment, emerging behaviors – often precede measurable sales shifts, but there was no scalable way to detect or validate them.

The company wanted to flip the model: proactively identify trends their clients hadn't yet considered, then validate those signals using proprietary point-of-sale data.

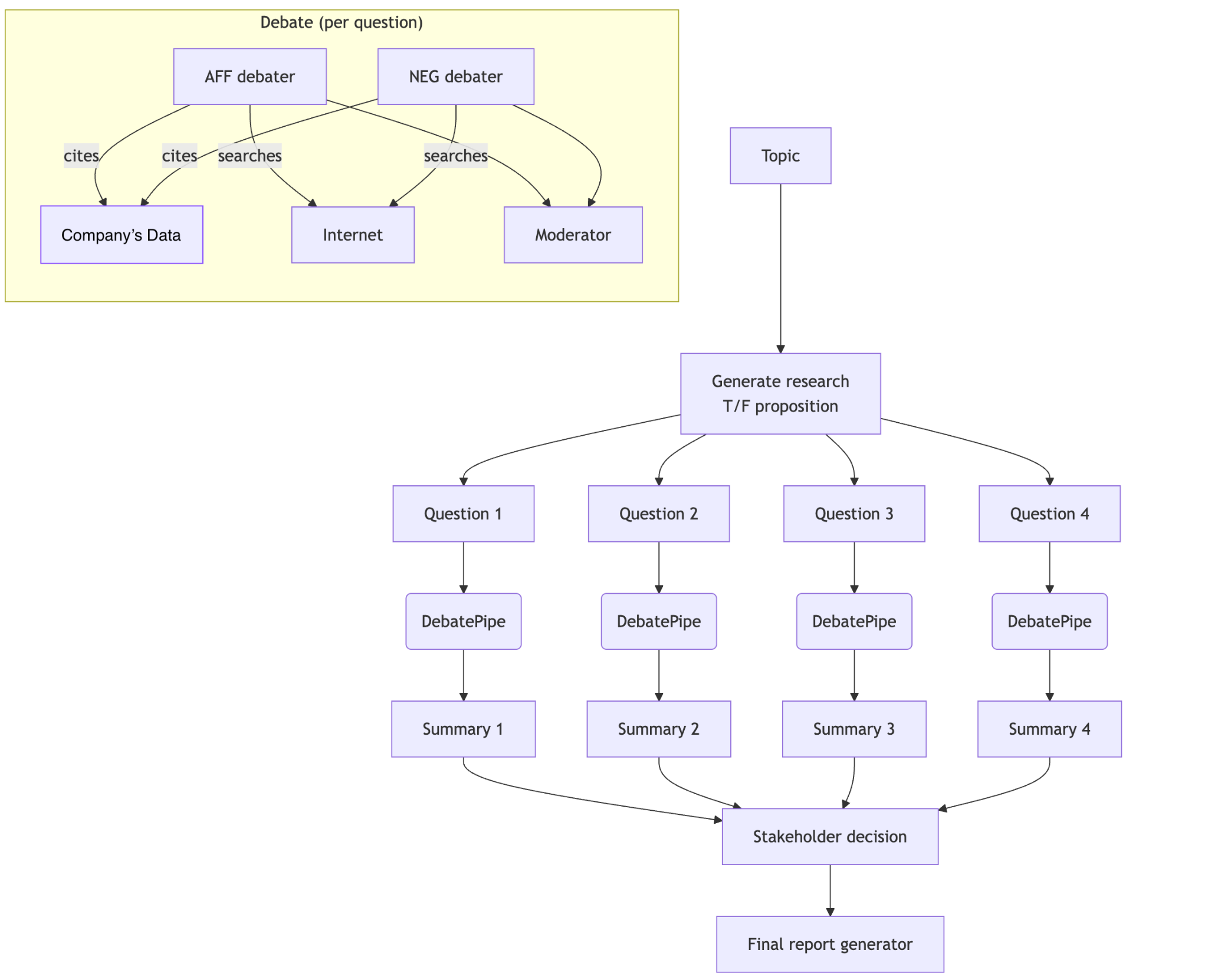

Fractional AI built a modular research agent system designed to mimic the workflow of an experienced Analyst. The system operates in two stages: topic generation and structured evaluation.

In the first stage, agents generate candidate "areas of concern" by scanning public web data for emerging trends tied to a specific brand or category (e.g., "Emerging Threat of GLP-1 Therapies to Calorie-Sweetened Soda Demand"). Topics are selected for relevance, novelty, and potential impact, and they cover both direct threats and adjacent opportunities that could affect a client’s performance.
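As a rough illustration of the selection step, the sketch below ranks candidate topics on the three criteria named above. The `CandidateTopic` type, the 0–1 score fields, and the unweighted average are all assumptions made for this example; the case study does not describe how the criteria are actually scored or combined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateTopic:
    """Hypothetical record for one candidate 'area of concern'."""
    title: str
    relevance: float  # assumed 0..1 score, e.g. assigned by a topic-generation agent
    novelty: float    # assumed 0..1 score
    impact: float     # assumed 0..1 score

    def score(self) -> float:
        # Assumption: a plain unweighted mean of the three criteria.
        return (self.relevance + self.novelty + self.impact) / 3


def select_topics(candidates: list[CandidateTopic], limit: int) -> list[CandidateTopic]:
    """Return the `limit` highest-scoring candidates, best first."""
    return sorted(candidates, key=lambda t: t.score(), reverse=True)[:limit]


# Made-up candidates, for illustration only.
candidates = [
    CandidateTopic("GLP-1 therapies vs. soda demand", 0.9, 0.8, 0.9),
    CandidateTopic("Seasonal packaging refresh", 0.6, 0.2, 0.3),
    CandidateTopic("Adjacent low-sugar mixers", 0.7, 0.7, 0.5),
]
shortlist = select_topics(candidates, limit=2)
```

In practice the scores would presumably come from the agents themselves, and any weighting would be a product decision rather than a fixed formula.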

In the second stage, each topic is analyzed through a multi-agent debate: two AI agents (affirmative and negative) argue for and against the trend’s importance using a blend of sales data and external web evidence. This reasoning-focused model allows each side to make informed arguments based on data.

The two arguments are then evaluated by a third agent simulating a brand executive, which reviews them and renders a final decision on the topic’s relevance within a 12–24 month business horizon. Each finalized report summarizes the decision and its rationale in clear language, suitable for delivery to clients.

This trend report agent sets the foundation to shift from reactive analytics to forward-looking and insight-driven consulting.

Key outcomes:

Analysts do two jobs: finding evidence and making an informed decision. LLMs often blur these tasks, steering research toward their own preferred outcome. The architecture for this project separates the roles and uses intentional bias, pitting pro and con researchers against each other, so that a neutral judge decides based on their briefs rather than on self-justified searches.

The critical design choice was not just modularity, but limiting each agent’s scope. When one agent both gathers evidence and renders judgment, its research naturally drifts toward supporting its own view. This system inverts that tendency: one agent is tasked to argue “this matters,” another to argue “it doesn’t,” and a third to decide. The judge reads the briefs without running its own tools, avoiding feedback loops and producing more stable outcomes.
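The separation of roles can be sketched in plain Python. This is a minimal illustration, not the production system: `Brief`, `Verdict`, `run_debate`, and the stub agents are hypothetical names, and the stub judge's rule is a placeholder standing in for an LLM call. The point it shows is structural: the judge function receives only the topic and the two briefs, so it has no way to run its own research.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Brief:
    side: str                  # "affirmative" or "negative"
    argument: str
    evidence: tuple[str, ...]  # citations gathered by the researcher


@dataclass(frozen=True)
class Verdict:
    matters: bool
    rationale: str


# A researcher maps a topic to a brief and may use tools internally.
Researcher = Callable[[str], Brief]
# The judge sees only the topic and the two briefs; it is handed no tools.
Judge = Callable[[str, Brief, Brief], Verdict]


def run_debate(topic: str, affirmative: Researcher, negative: Researcher,
               judge: Judge) -> Verdict:
    """Collect one brief per side, then hand both to the judge."""
    pro = affirmative(topic)
    con = negative(topic)
    if (pro.side, con.side) != ("affirmative", "negative"):
        raise ValueError("each researcher must argue its assigned side")
    return judge(topic, pro, con)


# Stub agents for illustration only; real agents would call a model.
def stub_affirmative(topic: str) -> Brief:
    return Brief("affirmative", f"{topic} matters",
                 ("placeholder source A", "placeholder source B"))


def stub_negative(topic: str) -> Brief:
    return Brief("negative", f"{topic} does not matter",
                 ("placeholder source C",))


def stub_judge(topic: str, pro: Brief, con: Brief) -> Verdict:
    # Placeholder rule, NOT the real decision logic: count evidence items.
    matters = len(pro.evidence) > len(con.evidence)
    return Verdict(matters, f"Decided '{topic}' from the two briefs alone.")


verdict = run_debate("GLP-1 and soda demand",
                     stub_affirmative, stub_negative, stub_judge)
```

Because the judge's signature contains no tool handle, swapping in a different judge or researcher cannot accidentally reintroduce the evidence-gathering feedback loop.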

The system is a set of composable agent loops – topic generation, proposition evaluation, debate orchestration, stakeholder simulation – built with OpenAI’s Agents SDK. Tool access is role-scoped: research agents can search the web and query sales data; the judge cannot. This keeps research directional but judgment conservative and tool-independent. Modularity also allows updates to any loop without disturbing the rest.
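One way to picture role-scoped tool access is an allow-list checked before every tool call. The sketch below is framework-independent and does not use the SDK; the role names, tool names, `ROLE_TOOLS` table, and `call_tool` helper are assumptions for illustration. In an agent framework, the equivalent would more likely be giving each agent its own tool list.

```python
from typing import Callable

# Hypothetical allow-list: which tools each role may call.
ROLE_TOOLS: dict[str, frozenset[str]] = {
    "affirmative_researcher": frozenset({"web_search", "sales_query"}),
    "negative_researcher": frozenset({"web_search", "sales_query"}),
    "judge": frozenset(),  # the judge reads briefs and calls no tools
}


class ToolAccessError(PermissionError):
    """Raised when a role tries to call a tool outside its scope."""


def call_tool(role: str, tool: str,
              registry: dict[str, Callable[[str], str]], query: str) -> str:
    """Run `tool` for `role` only if the role's allow-list permits it."""
    if tool not in ROLE_TOOLS.get(role, frozenset()):
        raise ToolAccessError(f"role '{role}' may not call '{tool}'")
    return registry[tool](query)


# Stub tools standing in for real web search and sales-data queries.
registry: dict[str, Callable[[str], str]] = {
    "web_search": lambda q: f"web results for: {q}",
    "sales_query": lambda q: f"sales rows for: {q}",
}

result = call_tool("affirmative_researcher", "web_search", registry, "GLP-1 soda")
```

Unknown roles fall through to an empty allow-list, so the default is no tool access rather than full access.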

Because the core question, “does this matter to a brand executive in the next 12–24 months?”, is inherently subjective, we focused evaluation on reliability and quality rather than a single accuracy score. Despite the ambiguity, we used structured checks at each stage to improve the system:

Performance tuning focused on cost, quality, and latency. In production, a full report took 25–35 minutes with o4-mini at about $3 in compute. Consistency was strong: ~80% of report pairs reached the same verdict on re-run. The pattern of adversarial research plus independent adjudication drove that consistency by keeping the judge out of the evidence-gathering process.
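The re-run consistency figure is a simple agreement rate over report pairs. The sketch below shows one way to compute it, assuming each pair holds the two boolean verdicts from running the same topic twice; the function name and the sample verdicts are made up for illustration and are not the project's data.

```python
def rerun_agreement(pairs: list[tuple[bool, bool]]) -> float:
    """Fraction of (first_run, second_run) verdict pairs that agree."""
    if not pairs:
        raise ValueError("need at least one report pair")
    same = sum(1 for first, second in pairs if first == second)
    return same / len(pairs)


# Illustrative verdicts only: four of the five pairs agree.
sample_pairs = [
    (True, True),
    (False, False),
    (True, False),
    (True, True),
    (False, False),
]
rate = rerun_agreement(sample_pairs)
```

A rate like this measures stability, not correctness: two runs can agree and both be wrong, which is why it complements rather than replaces review of the reports themselves.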

This behind-the-scenes architecture created a repeatable, extensible system for trend discovery and validation – paving the way for broader productization.